In space, no one (but AI) can hear you scream

Abstract

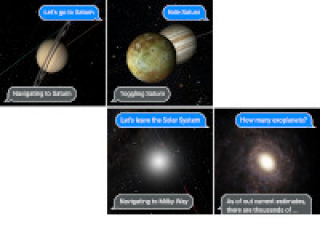

We present our initial work on integrating a conversational agent based on a large language model (LLM) into OpenSpace, to provide conversation-based navigation for astrophysics visualization software. We focus on applications of visualization for education and outreach, where the versatility and intuitiveness of conversational agents can be leveraged to provide engaging and meaningful learning experiences. Visualization benefits from the development of LLMs through their ability to understand requests in natural language, which allows users to express complex tasks efficiently. Natural language interfaces can be combined with more traditional visualization interaction techniques, streamlining real-time interaction and facilitating free data exploration. We therefore instructed a voice-controlled GPT-4o LLM to send commands to an OpenSpace instance, effectively giving the LLM the ability to steer the visualization software as a museum facilitator would for educational shows. We present our implementation and discuss future possibilities.

Domains

Computer Science [cs]

Main file

Brossier_2024_SNO.pdf (1.42 Mo)

Brossier_2024_SNO.jpg (9.62 Ko)

| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |